Supervisor - Stop Subprocesses Entering Fatal State

A script that exits as a "failure" too many times will be put into a FATAL state, and supervisor will no longer attempt to relaunch it.

A run is considered a failure if the script exits with an unexpected exit code, or if it doesn't stay running for the required minimum amount of time (startsecs, default of 1 second).

Depending on your circumstances, this might be problematic.

Example Log

When this occurs and you check the logs in /var/log/supervisor/supervisord.log, you might see something like this:

...

2018-07-17 11:50:03,712 INFO exited: SyncScript (exit status 0; expected)

2018-07-17 11:50:04,714 INFO spawned: 'SyncScript' with pid 20968

2018-07-17 11:50:04,766 INFO exited: SyncScript (exit status 255; not expected)

2018-07-17 11:50:05,768 INFO spawned: 'SyncScript' with pid 20969

2018-07-17 11:50:05,831 INFO exited: SyncScript (exit status 255; not expected)

2018-07-17 11:50:07,834 INFO spawned: 'SyncScript' with pid 20970

2018-07-17 11:50:07,880 INFO exited: SyncScript (exit status 255; not expected)

2018-07-17 11:50:10,885 INFO spawned: 'SyncScript' with pid 20971

2018-07-17 11:50:10,991 INFO exited: SyncScript (exit status 255; not expected)

2018-07-17 11:50:11,993 INFO gave up: SyncScript entered FATAL state, too many start retries too quickly

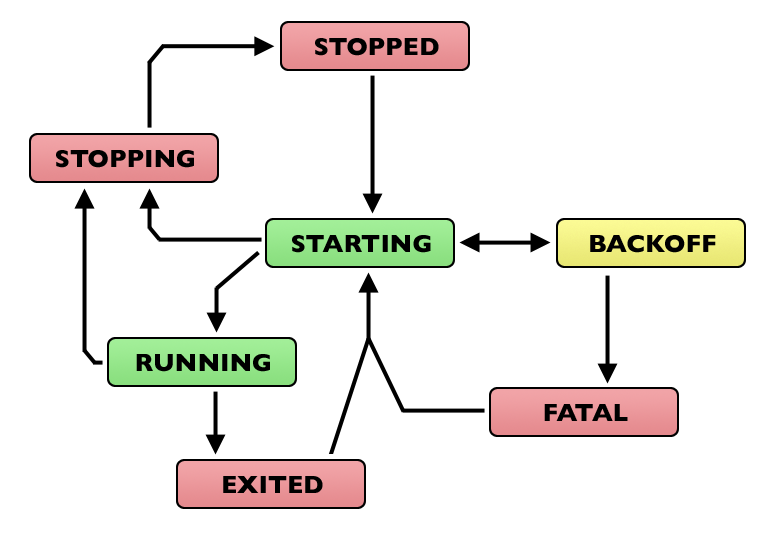

In case you're interested, here is the Subprocess State Transition Graph taken directly from the docs.

Solution

One way to resolve the issue is to program your script/process to handle every possible situation and exit cleanly. This is probably the "proper" solution, but it requires a lot of effort, and future updates may add more scenarios that don't get captured. Not to mention, "stuff happens" and there will likely be scenarios you don't think of.

I believe it may be more pragmatic to configure supervisor differently. There are two configuration options mentioned in the supervisor configuration doc that we can use.

- exitcodes

- startretries

The startretries option (default 3) specifies how many times supervisor should try to restart the process before it enters a fatal state and supervisor gives up.

You could just set this to an absurdly large number, to the point where it is almost infinite. For example, have your script sleep for 3 seconds at the start and set this to 9999.

This would result in supervisor retrying for over 8 hours (9999 × 3 seconds ≈ 8.3 hours) before it gives up. By then you have probably noticed and fixed the underlying issue, such as your database being down and your script being unable to connect.
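As a rough sketch, the relevant part of the program's configuration might look like the fragment below. The program name SyncScript comes from the log above; the command path is a hypothetical placeholder, so substitute your own.

```ini
; Hypothetical program section; only startretries is the point here.
[program:SyncScript]
; Placeholder command, replace with your real script path.
command=/usr/bin/php /path/to/sync-script.php
; Retry (almost) forever instead of giving up after the default 3 attempts.
startretries=9999
```

After editing the configuration, running supervisorctl reread followed by supervisorctl update should pick up the change.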

The exitcodes option (default 0,2 in Supervisor 3.x; Supervisor 4.0 and later default to 0) specifies the exit codes that supervisor should treat as expected, so that they do not count as a failure.

You could change this list to include all of your erroneous exit codes. I noticed that my PHP script in the log was exiting with code 255, so I just need to add that to the list.
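A minimal sketch of that change, assuming the same SyncScript program as in the log (the command path is a hypothetical placeholder):

```ini
; Hypothetical program section; only exitcodes is the point here.
[program:SyncScript]
; Placeholder command, replace with your real script path.
command=/usr/bin/php /path/to/sync-script.php
; Treat 255 as an expected exit code alongside the older defaults.
exitcodes=0,2,255
```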

Once you add the exit code to exitcodes in your configuration, the same error should be reported as expected in the log:

2018-07-18 09:44:33,809 INFO success: SyncScript entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2018-07-18 09:44:35,929 INFO exited: SyncScript (exit status 255; expected)

A Note About The "Backoff" State And Minimum Running Time

I also tested relying on the startretries option alone, and was surprised to see that supervisor did not enter a backing off state, which would have resulted in it taking longer and longer before retrying to start the process.

After re-reading the documentation it became clear why:

When an autorestarting process is in the BACKOFF state, it will be automatically restarted by supervisord. It will switch between STARTING and BACKOFF states until it becomes evident that it cannot be started because the number of startretries has exceeded the maximum, at which point it will transition to the FATAL state. Each start retry will take progressively more time.

BACKOFF - The process entered the STARTING state but subsequently exited too quickly to move to the RUNNING state.

So in summary, the BACKOFF state does not apply to a script returning an unexpected exit code, but to a script not running for the minimum amount of time (startsecs).

This seems counterintuitive to me but that's the way it is.

So even if your script executed successfully, if it doesn't run for the minimum amount of time, it will enter a backing off state, causing it to be run less frequently, and eventually enter a FATAL state.

To stop this, you may wish to manually set startsecs to 0 instead of the default of 1, or simply put something like a sleep(3) at the end of your script.
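If you go the configuration route, a sketch of the startsecs change could look like this (again, the command path is a hypothetical placeholder):

```ini
; Hypothetical program section; only startsecs is the point here.
[program:SyncScript]
; Placeholder command, replace with your real script path.
command=/usr/bin/php /path/to/sync-script.php
; 0 means the script does not need to stay up for any minimum time.
startsecs=0
```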

Calculating Total Time

Since supervisor adds one second to the delay before each retry attempt, the total time (in seconds) until supervisor stops spawning the process is the nth triangular number, where n is the value of startretries. This can be calculated with:

n * (n+1)/2

To give an example, startretries being set to 1000 would result in supervisor retrying for at least 5.79 days before giving up.
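Written out, and assuming the delay before retry k is exactly k seconds (the time each attempt itself takes is ignored, hence "at least"), the example works out as:

```latex
T(n) = \sum_{k=1}^{n} k = \frac{n(n+1)}{2}
\qquad
T(1000) = \frac{1000 \cdot 1001}{2} = 500500\ \text{s}
        = \frac{500500}{86400}\ \text{days} \approx 5.79\ \text{days}
```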

References

- Supervisor Docs - Subprocess - process states

- Advanced Bash-Scripting Guide: Appendix E. Exit Codes With Special Meanings

- supervisor-users.10397.x6.nabble.com - [Supervisor-users] infinite startup retries?

First published: 16th August 2018