ZFS - Add Intent Log Device

You can add a dedicated storage device to your ZFS RAID array (pool) to hold the ZFS Intent Log (ZIL). Such a device is often referred to as a Separate Intent Log (SLOG). The ZIL is where synchronous writes are recorded before they are later flushed, as part of a transactional write, to your much slower spinning disks. This means that any application that requires data to be fully written to disk before carrying on, such as a database, should become much faster, as it only has to wait for the data to reach a much faster SSD rather than your slower spinning disks.

Steps

Run the command below to add a log device to your pool. The -f flag forces ZFS to use the device even if it appears to be in use, so double-check the device name first.

sudo zpool add -f [pool name] log /dev/sd[x]

In an enterprise environment, you may want to mirror your intent log for redundancy.

sudo zpool add -f [pool name] log mirror /dev/sd[x] /dev/sd[x2]
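The two commands above differ only in the mirror keyword, so they can be sketched as one small helper. This is a minimal sketch, not part of ZFS: the function name build_add_log_cmd and the example device paths are hypothetical. It only prints the command, uses the -n (dry run) flag of zpool add instead of -f, and assumes you pass stable /dev/disk/by-id paths, since sdX names can change between boots.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the zpool command that would add a
# log vdev to a pool. Two or more devices are added as a mirror.
build_add_log_cmd() {
    if [ "$#" -lt 2 ]; then
        echo "usage: build_add_log_cmd POOL DEVICE [DEVICE...]" >&2
        return 1
    fi
    pool=$1
    shift
    if [ "$#" -ge 2 ]; then
        # -n asks zpool to show the resulting layout without changing the pool
        echo "zpool add -n $pool log mirror $*"
    else
        echo "zpool add -n $pool log $1"
    fi
}

# Example (device names are placeholders):
#   build_add_log_cmd zpool1 /dev/disk/by-id/ata-EXAMPLE_SSD_A /dev/disk/by-id/ata-EXAMPLE_SSD_B
```

Once the printed dry-run output looks right, drop -n and run the command with sudo to actually add the device.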

Checking Success

You can check that your log device was added correctly by running sudo zpool status.

      pool: zpool1
     state: ONLINE
      scan: none requested
    config:

        NAME        STATE     READ WRITE CKSUM
        zpool1      ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            sda     ONLINE       0     0     0
            sdc     ONLINE       0     0     0
        logs
          sdb       ONLINE       0     0     0
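If you want to script this check, the status output can be parsed for its logs section. The following is a rough sketch under the assumption that the output keeps the layout shown above; check_log_devices is a hypothetical name, not a ZFS command. It reads zpool status text on standard input, prints each entry under logs with its state, and returns non-zero if there is no log device or if any entry is not ONLINE.

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` output on stdin and report the
# entries listed under the "logs" section.
check_log_devices() {
    awk '
        # A line containing only "logs" opens the section.
        $1 == "logs" && NF == 1 { in_logs = 1; next }
        # A blank line or a following section header closes it.
        in_logs && (NF == 0 || $1 == "cache" || $1 == "spares") { in_logs = 0 }
        # Inside the section, column 1 is the name and column 2 the state.
        in_logs { print $1, $2; found = 1; if ($2 != "ONLINE") bad = 1 }
        END { exit ((found && !bad) ? 0 : 1) }
    '
}

# Example (requires ZFS):
#   sudo zpool status zpool1 | check_log_devices
```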

Removing Log Device

If you change your mind and want to remove the dedicated log device, just tell ZFS that you want to remove it. The pool then goes back to keeping its intent log on the main pool disks.

sudo zpool remove [pool name] [device name]

For example:

sudo zpool remove zpool1 ata-Samsung_SSD_850_EVO_500GB_S3R3NF0J848724N-part1
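For a mirrored log, my understanding is that zpool remove takes the name of the top-level mirror vdev (for example mirror-1) rather than one of its member disks. The sketch below finds that name by looking for the entries nested directly under logs in the status output. It assumes children are indented by two spaces, as in the listing earlier in this post, and top_level_log_vdevs is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` output on stdin and print only the
# top-level entries under "logs" (a single disk, or a mirror-N vdev).
top_level_log_vdevs() {
    awk '
        /^[ \t]*logs[ \t]*$/ {
            in_logs = 1
            match($0, /logs/); base = RSTART    # column where "logs" starts
            next
        }
        in_logs {
            if (NF == 0) { in_logs = 0; next }  # blank line ends the config
            match($0, /[^ \t]/)                 # column of the first name character
            if (RSTART <= base) { in_logs = 0; next }   # next section, e.g. cache
            if (RSTART == base + 2) print $1    # direct children of "logs"
        }
    '
}

# Example (requires ZFS):
#   sudo zpool status zpool1 | top_level_log_vdevs
#   sudo zpool remove zpool1 mirror-1
```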

Benchmarking

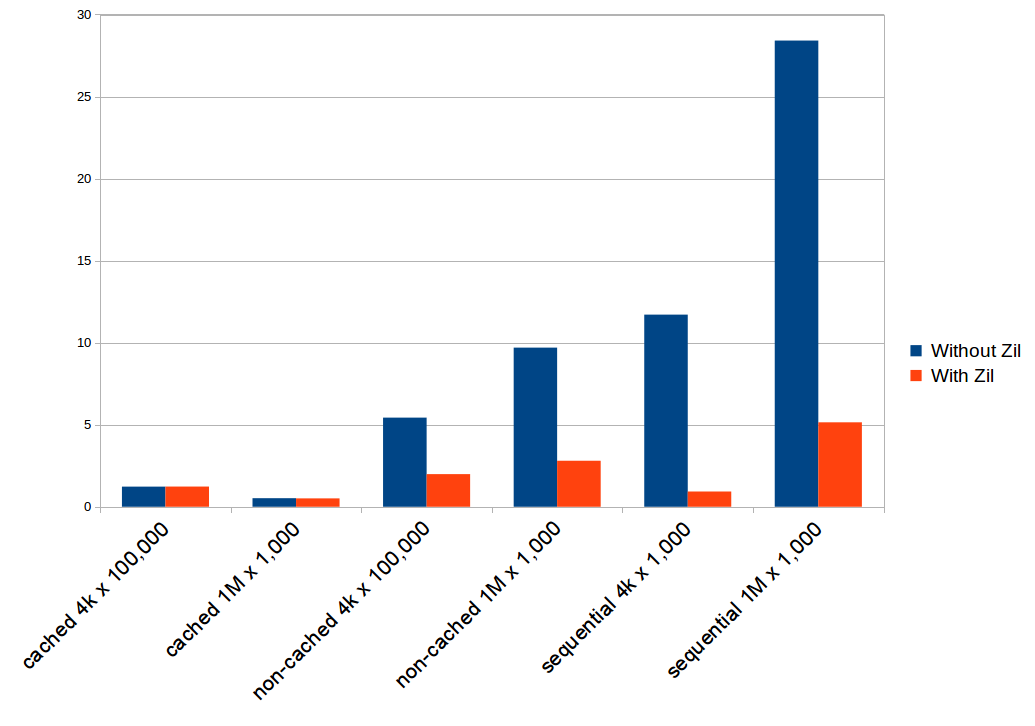

I was keen to see how much effect adding a ZIL would have on my test setup of two WD 3TB Red drives in a mirror. Below are the results of my disk-write benchmarking script which you can get in the appendix.

As you can see, the log device has a massive impact on write performance in any scenario where your application waits to make sure the data has been written to disk. This includes databases, iSCSI, and NFS when it is set to synchronous rather than asynchronous for safety.
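Whether a write counts as synchronous also depends on the sync property of the dataset, which you can read with zfs get. As a rough guide, the sketch below maps the three values I am aware of (standard, always, disabled) to what they mean for a log device. describe_sync_mode is a hypothetical helper, and the wording of the descriptions is mine rather than from the ZFS documentation.

```shell
#!/bin/sh
# Hypothetical helper: explain what a dataset's `sync` property value means
# for a separate log device.
describe_sync_mode() {
    case "$1" in
        standard) echo "standard: only writes the application requests as synchronous go through the ZIL" ;;
        always)   echo "always: every write is treated as synchronous and goes through the ZIL" ;;
        disabled) echo "disabled: synchronous requests are ignored, so a log device is not used" ;;
        *)        echo "unknown sync value: $1" >&2; return 1 ;;
    esac
}

# Example (requires ZFS; zpool1 is the pool name used in this post):
#   describe_sync_mode "$(zfs get -H -o value sync zpool1)"
```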

Appendix

Benchmarking Script

#!/bin/bash

echo "Performing cached write of 100,000 4k blocks..."

/usr/bin/time -f "%e" sh -c 'dd if=/dev/zero of=4k-test.img bs=4k count=100000 2> /dev/null'

rm 4k-test.img

echo ""

sleep 3

echo "Performing cached write of 1,000 1M blocks..."

/usr/bin/time -f "%e" sh -c 'dd if=/dev/zero of=1GB.img bs=1M count=1000 2> /dev/null'

rm 1GB.img

echo ""

sleep 3

echo "Performing non-cached write of 100,000 4k blocks..."

/usr/bin/time -f "%e" sh -c 'dd if=/dev/zero of=4k-test.img bs=4k count=100000 conv=fdatasync 2> /dev/null'

rm 4k-test.img

echo ""

sleep 3

echo "Performing non-cached write of 1,000 1M blocks..."

/usr/bin/time -f "%e" sh -c 'dd if=/dev/zero of=1GB.img bs=1M count=1000 conv=fdatasync 2> /dev/null'

rm 1GB.img

echo ""

sleep 3

echo "Performing synchronous write of 1,000 4k blocks..."

/usr/bin/time -f "%e" sh -c 'dd if=/dev/zero of=4k-test.img bs=4k count=1000 oflag=dsync 2> /dev/null'

rm 4k-test.img

echo ""

sleep 3

echo "Performing synchronous write of 1,000 1M blocks..."

/usr/bin/time -f "%e" sh -c 'dd if=/dev/zero of=1GB.img bs=1M count=1000 oflag=dsync 2> /dev/null'

rm 1GB.img
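The script prints elapsed seconds for each test. To compare runs of different sizes, it can help to convert a time into throughput. This is a small sketch of that arithmetic: mib_per_sec is a hypothetical helper that takes the number of bytes written and the elapsed seconds and prints MiB/s.

```shell
#!/bin/sh
# Hypothetical helper: convert bytes written and elapsed seconds into MiB/s.
mib_per_sec() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN {
        if (secs <= 0) { print "elapsed time must be positive" > "/dev/stderr"; exit 1 }
        printf "%.2f\n", bytes / 1048576 / secs
    }'
}

# Example: the 1M tests write 1,000 blocks of 1 MiB (1048576000 bytes).
#   mib_per_sec 1048576000 10
```

For the 4k tests the byte count is 4096 multiplied by the block count passed to dd.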

References

- ZFS Build - HowTo : Add Log Drives (ZIL) to Zpool

- FreeNAS - The ZFS ZIL and SLOG Demystified

- 45drives.com - FreeNAS - What is ZIL & L2ARC

- iXsystems - To SLOG or not to SLOG: How to best configure your ZFS Intent Log

First published: 16th August 2018