ZFS Record Size

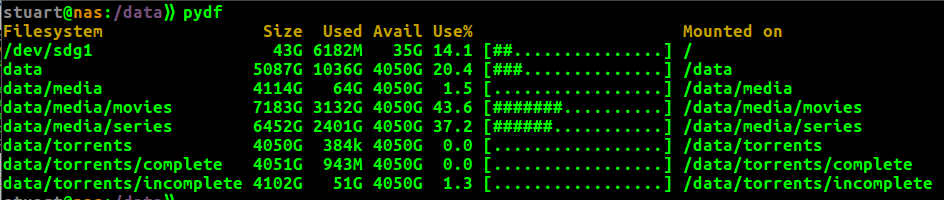

Whilst watching a discussion between Allan Jude and Wendell about ZFS, the topic of using the appropriate record size came up. I was intrigued and immediately started breaking up my 6 x 3TB RAIDz2 NAS into multiple datasets.

Creating a dataset is as easy as:

sudo zfs create pool/dataset-name

I then used the following command to set what I thought was the appropriate record size for the different data types.

sudo zfs set recordsize=[size] data/media/series

So for things like the movies and series datasets, I set a size of 1 mebibyte.

sudo zfs set recordsize=1M data/media/series

whereas for the incomplete torrents, I set it to just 64K.

sudo zfs set recordsize=64K data/[incomplete-torrents-dataset]
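To confirm what each dataset ended up with, something like the following should work. It is only a minimal sketch: `show_recordsizes` is a helper name made up here, and `data` is assumed to be the pool name, as in the commands above.

```shell
# Hypothetical helper: list the recordsize of every filesystem under a pool.
# The SOURCE column shows whether a value was set locally or inherited.
show_recordsizes() {
  zfs get -r -t filesystem -o name,value,source recordsize "$1"
}

# Usage (assumes the pool is called "data"):
#   show_recordsizes data
```

Reading the property does not need sudo, and the source column makes it easy to spot a child dataset that is still inheriting the default.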

Since making the change, it feels like my media loads more quickly when I launch a video in mpv for the first time in a while. (The second launch causes no disk activity and is much faster, which indicates it is served from cache.)

However, I definitely noticed the massive performance hit I took when setting up my datasets. Every dataset is treated as a separate filesystem, so moving files into a dataset is a full copy rather than a quick rename, which is quite slow on RAIDz2 with an old AMD FX chip and spinning rust. I also now pay that penalty whenever files move from one dataset to another, such as when torrents get moved from incomplete to complete.

I'm hoping that this has resulted in very low fragmentation, but I don't know how to tell. I think it will be worth it in the end, when the NAS is full and I have to export my data to another ZFS system using zfs send.
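On the fragmentation question, the pool itself reports a `fragmentation` property. As far as I know it describes how fragmented the free space is, not how scattered existing files are, so it is only a rough indicator. A minimal sketch, where `pool_fragmentation` is a hypothetical helper name and `data` is assumed to be the pool:

```shell
# Hypothetical helper: show free-space fragmentation and how full the pool is.
pool_fragmentation() {
  zpool get fragmentation,capacity "$1"
}

# Usage (assumes the pool is called "data"):
#   pool_fragmentation data
```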

Some Facts About Record Size

- The default record size for ZFS is 128K.

- Changing the record size only affects newly written files. It has no effect on existing files.

- All files are stored either as a single block of varying sizes (up to the recordsize) or using multiple recordsize blocks.

- This suggests to me that using a record size of 1M will not cause 1,024 small text files to eat up 1 gibibyte of disk space.

- Your file's block size will never be greater than the record size, but can be smaller.

- The block is the unit that ZFS validates through checksums.
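The sizing rules in the list above can be sketched as a bit of shell arithmetic. This is a simplified model, not how ZFS accounts for space internally: the function name is made up, the 2 KiB file size is an assumption for illustration, and compression, sector rounding, RAIDz parity and metadata are all ignored.

```shell
# Simplified model of the data-block bytes for one file.
# Ignores compression, sector rounding, RAIDz parity and metadata.
logical_blocks_bytes() {
  file_size=$1
  recordsize=$2
  if [ "$file_size" -le "$recordsize" ]; then
    # Small file: a single block sized to the file.
    echo "$file_size"
  else
    # Large file: several blocks, each a full record.
    blocks=$(( (file_size + recordsize - 1) / recordsize ))
    echo $(( blocks * recordsize ))
  fi
}

# 1,024 text files of 2 KiB each (assumed size) on a 1M recordsize dataset:
echo $(( 1024 * $(logical_blocks_bytes 2048 1048576) ))   # 2097152 bytes (2 MiB), not 1 GiB

# A 1,500,000 byte file on the same dataset occupies two full 1M records:
logical_blocks_bytes 1500000 1048576                       # 2097152
```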

Performance Impacts

Tuning the record size should not have much effect on burst workloads of 5 seconds or less, or those workloads that are sequential in nature.

Early storage bandwidth saturation can occur when using overly large record sizes. If an application, such as an InnoDB database, requests 16K of data, then fetching a 128K block/record doesn't change the latency that the application sees, but it does waste bandwidth on the disk's channel. A 100 MB/s channel could handle just under 800 requests per second at 128K, or a staggering 6,250 random 16K requests per second. This is an important factor, as you probably don't want to limit your MySQL database to under 800 IOPS.
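The figures above come from simple division, reproduced here as a rough sketch. The function name is made up, and decimal units are assumed (100 MB/s = 100,000,000 bytes per second, 128K = 128,000 bytes), since that is what gives the 6,250 figure.

```shell
# Upper bound on requests per second when every request moves one full record.
max_requests_per_sec() {
  bandwidth_bytes=$1
  record_bytes=$2
  echo $(( bandwidth_bytes / record_bytes ))
}

max_requests_per_sec 100000000 128000   # 781
max_requests_per_sec 100000000 16000    # 6250
```

With binary units (131,072 and 16,384 bytes) the numbers come out slightly lower, but the 8x ratio between the two is the same.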

Another factor is that a partial write to an uncached block requires the system to fetch the rest of the block's data from disk before the block can be updated. Otherwise, how is ZFS going to know what the new checksum should be? However, if your blocks are smaller, then updates are likely to be at least an entire block in size. These "full" blocks can be written without needing to fetch data first.
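As a rough illustration of that read-before-write rule, the check below asks whether a write covers only whole records. It is a simplified sketch under stated assumptions: `needs_read_first` is a made-up name, the affected blocks are assumed to be uncached, and writes beyond the end of the file are not considered.

```shell
# Does a write of <length> bytes at <offset> leave any record only partly covered?
# Simplified: assumes the affected records exist on disk and are not cached.
needs_read_first() {
  offset=$1
  length=$2
  recordsize=$3
  end=$(( offset + length ))
  if [ $(( offset % recordsize )) -eq 0 ] && [ $(( end % recordsize )) -eq 0 ]; then
    echo no    # only whole records are overwritten
  else
    echo yes   # at least one record must be read, patched, and rewritten
  fi
}

# A 16K database page written to a 16K recordsize dataset:
needs_read_first 0 16384 16384     # no
# The same page written to a 128K recordsize dataset:
needs_read_first 0 16384 131072    # yes
```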

Before you go ahead and reduce your block size to something tiny, like 4K, there are good reasons for having larger block sizes. Having larger blocks means there are fewer blocks that need to be written in order to write a large piece of data, such as a movie. Fewer blocks means less metadata to write and manage. Many aspects of the ZFS filesystem, such as caching, compression, checksums, and de-duplication, work at the block level, so a larger block size is likely to reduce their overheads. For example, with regards to nop-write, if a backup operation tries to copy a 700 MiB film when using a block size of 1 MiB, ZFS will only have to check 700 checksums for changes. If the block size was 16 KiB, then ZFS would have to check 44,800 checksums for differences.
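The block counts in the film example are easy to reproduce. A small sketch, with a made-up function name, that rounds up to whole blocks:

```shell
# Number of blocks (and therefore checksums) needed to hold a file.
blocks_for() {
  file_size=$1
  block_size=$2
  echo $(( (file_size + block_size - 1) / block_size ))
}

film=$(( 700 * 1024 * 1024 ))             # 700 MiB in bytes

blocks_for "$film" $(( 1024 * 1024 ))     # 700
blocks_for "$film" $(( 16 * 1024 ))       # 44800
```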

Conclusion

If you're running a MySQL database on an SSD using ZFS, you should change your dataset's record size to 16K. If, however, you are running a media server on traditional HDDs, then you are probably fine on the default 128K, or you may wish to increase it to 1M, but don't expect any massive performance boost.

References

- Oracle - Tuning ZFS recordsize

- OpenZFS - Performance tuning

- Joyent - Bruning Questions: ZFS Record Size

- Programster's Blog - Allan Jude Interview with Wendell - ZFS Talk & More

First published: 16th August 2018