Hard and Soft Links

I've often thought there should be a relational database based filesystem in order to better organize my media collection, but it turns out all I needed was to understand hard links.

The Problem

There's a movie collection that has been sorted into folders by year. That way one can easily access the latest films and the list never grows too large. However, you and your friends have "marathon nights" where you watch every episode of Star Wars or Batman in order. It would be great to have another folder called Batman from which each of the appropriate movies, which came out in different years, can be opened. However, one doesn't want to use soft links, because if one moves or renames the original files, the links will break and it becomes too much effort to maintain. If one were to just copy the files, it would be a massive waste of disk space.

Hard And Soft Links

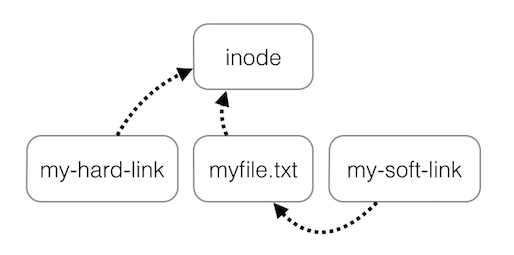

Hard and soft links are basically "pointers". They point your system somewhere to fetch data. The accompanying picture helps explain the difference between a hard link and a soft link in terms of how they point.

As you can see, all your filenames are essentially hard links, but there is usually just one of them per file. All your actual data (the contents of the files when you open them) is accessed through inodes, which tell the system which blocks on disk to look at, among other things. When you create a file in your filesystem called myfile.txt, the name myfile.txt points to the inode, which points to the blocks that hold the content. The myfile.txt name is just a metadata pointer, separate from the actual content.

When you create a soft link (in this case called "my-soft-link"), you are just creating a pointer to that filename, which itself is a pointer to the inode. If you were to move myfile.txt to another directory, or were to rename it, then the pointer to that file (the symlink) will no longer work, as its destination no longer exists.
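To see this breakage for yourself, here is a minimal Python sketch. It assumes a POSIX system where ordinary users can create symlinks. The file names come from the description above, and the function name `symlink_survives_rename` is just something made up for the example.

```python
import os
import tempfile


def symlink_survives_rename():
    """Return (works_before, works_after) for a symlink whose target is renamed."""
    with tempfile.TemporaryDirectory() as d:
        target = os.path.join(d, "myfile.txt")
        link = os.path.join(d, "my-soft-link")
        with open(target, "w") as f:
            f.write("hello\n")

        # The symlink stores the *path* of the target, not its inode.
        os.symlink(target, link)
        works_before = os.path.exists(link)  # exists() follows the link

        # Rename the target; the link still points at the old path.
        os.rename(target, os.path.join(d, "renamed.txt"))
        works_after = os.path.exists(link)

        return works_before, works_after


if __name__ == "__main__":
    print(symlink_survives_rename())
```

The link itself is still there after the rename (`os.path.islink` would report it), but following it leads nowhere.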

When you create a hard link, you are creating another file name that points to the same inode rather than to the other file name. The new file name can be called anything and be located anywhere within the same filesystem. Since both filenames are pointers to the inode, rather than one to the other, both filenames can be moved to other directories or renamed without affecting each other. Since hard links are just pointers to the underlying data, they have two major advantages over copying the original file:

* They are instant to create.
* They take up an inconsequential amount of disk space (a few bytes, no matter the file size).
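Applied to the movie problem, the idea can be sketched in Python roughly as follows. The folder layout and file names are invented for illustration, the "film" is a few bytes of placeholder data, and `link_into_marathon_folder` is a hypothetical helper name.

```python
import os
import tempfile


def link_into_marathon_folder():
    """Hard-link a film from its year folder into a 'Batman' folder."""
    with tempfile.TemporaryDirectory() as root:
        year_dir = os.path.join(root, "2005")
        marathon_dir = os.path.join(root, "Batman")
        os.makedirs(year_dir)
        os.makedirs(marathon_dir)

        original = os.path.join(year_dir, "Batman Begins.mkv")
        with open(original, "wb") as f:
            f.write(b"pretend this is a film")

        # A second name for the same inode; no data is copied.
        linked = os.path.join(marathon_dir, "1 - Batman Begins.mkv")
        os.link(original, linked)

        same_inode = os.stat(original).st_ino == os.stat(linked).st_ino
        link_count = os.stat(linked).st_nlink  # names pointing at the inode

        # Renaming the first name does not disturb the second one.
        os.rename(original, os.path.join(year_dir, "batman-begins-2005.mkv"))
        with open(linked, "rb") as f:
            still_readable = f.read() == b"pretend this is a film"

        return same_inode, link_count, still_readable


if __name__ == "__main__":
    print(link_into_marathon_folder())
```

Note that `os.link` fails if the two folders live on different filesystems, which matches the "within the same filesystem" restriction above.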

Disadvantages of Hard Links

Most tools, such as ls, ncdu, rsync, and fdupes, have in-built support/arguments for handling symlinks, but may or may not support handling hard links, as can be seen in the sections below. Also, because every hard link leads to the same inode, content edited in place through one name changes for all of the other names too. Permissions and attributes such as read-only or immutable are stored on the inode as well, so you cannot protect the content under one name while leaving it editable through another.
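The shared-content point is easy to demonstrate. Below is a small Python sketch in which a file is edited through its second name and then read back through its first. The file names and the function name `edit_through_other_name` are made up for the example.

```python
import os
import tempfile


def edit_through_other_name():
    """Append via one hard link, then read the result via the other."""
    with tempfile.TemporaryDirectory() as d:
        first = os.path.join(d, "notes.txt")
        second = os.path.join(d, "notes-link.txt")
        with open(first, "w") as f:
            f.write("original\n")
        os.link(first, second)

        # Append in place through the second name.
        with open(second, "a") as f:
            f.write("edited via second name\n")

        # The first name sees the change, because both names share one inode.
        with open(first) as f:
            return f.read()


if __name__ == "__main__":
    print(edit_through_other_name())
```

This only holds for edits made in place. A program that saves by writing a new file and renaming it over the old name would end up with a fresh inode under that name and leave the other name pointing at the old content.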

So far, I've only talked about linking files and not directories. You can soft link directories, but hard linking them is not possible for very good reasons. For more information, refer to a post on Ask Ubuntu and a post on Unix & Linux.

Ncdu vs ls

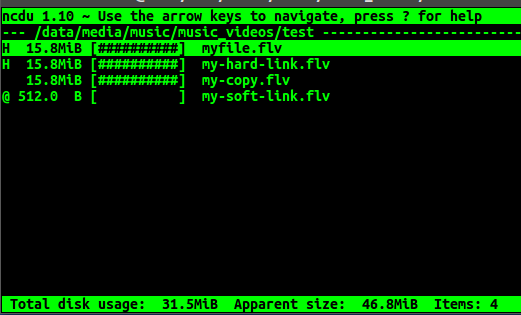

Ncdu is really good at handling hard links and will indicate them with an H beside them. The original is marked as well, since every file name is technically a hard link and there is no way to tell which one was the original.

As you can see, the hard links and the copy all show up as the same size, but the soft link is only the 512 bytes necessary to set it up. Ncdu cleverly shows that the files will look like 46.MiB in size, but the files really only take up 31.5 MiB on disk.

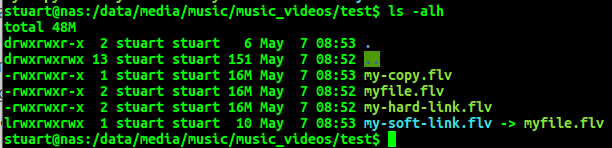

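On the other hand, ls -alh (shown in the second screenshot) does not mark hard links in any obvious way, though it does clearly show soft links. The only hint is the link count in the second column, which is greater than 1 for a file that has more than one name. It shows just the one total, which is the sum of the file sizes rather than how much space is actually being used on disk.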
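The gap between the two kinds of total can be reproduced with a few lines of Python. This is only a sketch of the idea, not how ncdu or ls work internally: `directory_totals` is a hypothetical helper, and it adds up byte sizes (`st_size`) rather than allocated disk blocks to keep things simple.

```python
import os


def directory_totals(root):
    """Return (naive_total, unique_total) in bytes for all files under root.

    naive_total counts every file name; unique_total counts each inode once,
    so extra hard links to the same data add nothing.
    """
    naive_total = 0
    unique_total = 0
    seen_inodes = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # lstat() looks at a symlink itself rather than its target.
            st = os.lstat(os.path.join(dirpath, name))
            naive_total += st.st_size
            key = (st.st_dev, st.st_ino)  # identifies the inode
            if key not in seen_inodes:
                seen_inodes.add(key)
                unique_total += st.st_size
    return naive_total, unique_total


if __name__ == "__main__":
    print(directory_totals("."))
```

For a folder holding one file, one hard link to it, and one copy of it, the naive total is three times the file size while the unique total is only twice the file size.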

Fdupes and Hard Links

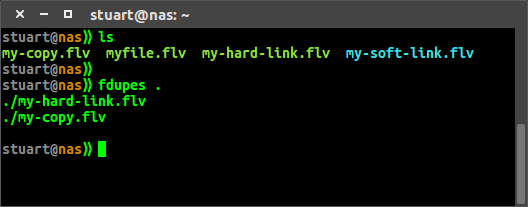

Fdupes has great support for hard links. By default it will not treat hard links as duplicates to remove, as the screenshot shows.

However, you can use the -H argument to change this so that it treats hard links as duplicates to remove.
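To make the distinction concrete, here is a rough Python sketch of a duplicate finder with the same kind of switch. It is not fdupes's actual implementation: `find_duplicates` and its `include_hardlinks` parameter are names invented for this example, and it reads whole files into memory, which would be a poor idea for a real movie collection.

```python
import hashlib
import os
from collections import defaultdict


def find_duplicates(root, include_hardlinks=False):
    """Group files under root by content; return groups with 2+ names.

    With include_hardlinks=False, extra names for an already-seen inode
    are skipped, so hard links are not reported as duplicates.
    """
    by_digest = defaultdict(list)
    seen_inodes = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # ignore soft links entirely
            st = os.stat(path)
            key = (st.st_dev, st.st_ino)
            if not include_hardlinks and key in seen_inodes:
                continue  # another name for data we already have
            seen_inodes.add(key)
            with open(path, "rb") as f:
                digest = hashlib.sha256(f.read()).hexdigest()
            by_digest[digest].append(path)
    return sorted(sorted(group) for group in by_digest.values() if len(group) > 1)


if __name__ == "__main__":
    for group in find_duplicates("."):
        print(group)
```

With the default setting, a file, its hard link, and its copy produce one group of two names (the file and the copy). With `include_hardlinks=True`, all three names end up in the group.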

Automatically Replace Copies With Hard Links

If you had previously created copies of your files and ignored the consequences for your disk space, you can claim that space back and leave your file structure exactly as it is by using jdupes with the -L or --linkhard argument, which replaces the copies with hard links. If jdupes is not your thing, there is a Unix & Linux post about exactly this problem, in which someone has linked a Perl script that does the job. I haven't tested any of these methods yet.
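For a feel of what such a tool has to do, here is a rough Python sketch of the approach. It is not how jdupes or the Perl script work, and `replace_copies_with_hard_links` is a hypothetical name. It assumes that files with the same SHA-256 digest are identical, it throws away the duplicate's own permissions and timestamps, and it should only ever be tried on throwaway data first.

```python
import hashlib
import os
from collections import defaultdict


def _digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def replace_copies_with_hard_links(root):
    """Replace identical copies under root with hard links; return the count."""
    groups = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            st = os.stat(path)
            if st.st_size == 0:
                continue  # nothing to gain from linking empty files
            # Hard links cannot cross filesystems, so the device is in the key.
            groups[(st.st_dev, st.st_size, _digest(path))].append(path)

    replaced = 0
    for paths in groups.values():
        paths.sort()
        keeper = paths[0]
        keeper_inode = os.stat(keeper).st_ino
        for duplicate in paths[1:]:
            if os.stat(duplicate).st_ino == keeper_inode:
                continue  # already a hard link to the keeper
            # Create the link under a temporary name, then swap it into place
            # so the duplicate's name never disappears.
            temp_name = duplicate + ".hardlink-tmp"
            os.link(keeper, temp_name)
            os.replace(temp_name, duplicate)
            replaced += 1
    return replaced


if __name__ == "__main__":
    print(replace_copies_with_hard_links("."))
```

Running it a second time over the same folder should report zero replacements, since every former copy already shares an inode with the file that was kept.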

First published: 16th August 2018